Patterns and Practices, Stockholm 2018 - @MasashiNarumoto, @fkc888, @EPGLEAP

Introduction

These are our best observations when talking to customers in the field. We welcome you to challenge our observations if you have different experiences. We're here, not only to share knowledge, but also to learn from you.

In the envelope, there's a sales pitch of how to attend Leap (Jan 28th - Feb 1st 2019). Two tracks: Architecture or Developer.

Designing microservices using serverless technology

Masashi Narumoto, Principal Program Manager

Serverless = Most of the workflow can be done from the client side, accessing APIs. We care because we don't need to manage servers. If we have peaks and troughs, we don't have to manage scaling. This means that we might see cost benefits during troughs.

SOA vs Microservices: the biggest difference is the goal. In SOA, once you had developed a service, everyone was supposed to reuse it. Web service standards (like UDDI) supported this. With microservices, the goal is easy deployment and replaceability: we want to be able to quickly replace one version of a service with another.

Microservices are not the best architectural pattern for everything: if you are running a simple web site, you probably will not benefit from breaking it up into 10 microservices.

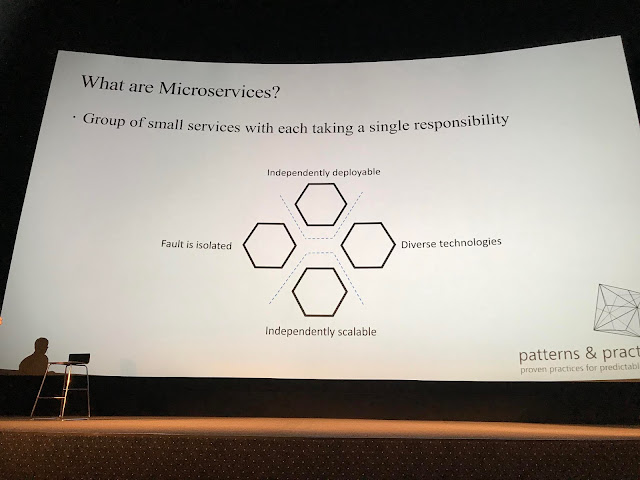

A microservice is an independently deployable, independently scalable unit in which faults are isolated. Because a microservice is also isolated behind an API or within a container, you can use diverse technologies, enabling you to use the right tool for the job (or the one that fits your development organization).

One of the core functions in a microservice-based system is an API gateway: something that routes the user's requests to the right backend service (especially since the services are designed to be replaced or moved quickly).

You should configure scale sets for workflows that have different scalability characteristics: if you realize that you need to be able to scale your API independently from your processing, put them in different scale sets.

With Service Endpoints, you can ensure that your communication with datastores (and other services) occurs within the Azure network, as opposed to over the public internet, making the communication much more secure.

We recommend using Azure monitoring.

Potential issues with traditional microservices (wow, "traditional" already!) include:

- managing storage and messaging

- maintaining different environments (dev, test and prod)

- partial auto-scaling: many services auto-scale out but do not auto-scale in (often to ensure reliability), or auto-scale deployment units (e.g. pods) but not the computational nodes they run on

- cost (paying per hosting node)

5 of 9 customers in this region use the architecture depicted above. Whenever a new document or image is submitted, it gets stored in blob storage. Then a Function validates the data and splits it into distinct parts. From the divided data, multiple Functions trigger analysis tasks and store aggregations.

If you have a higher throughput than 10,000 files per second, store an event in a queue as well. Event Grid solves this issue.

The benefits of serverless that we see in these architectures are abstraction from servers, less infrastructure maintenance, automatic scaling (you don't have to care), pay per execution and loosely coupled components driven by events.

The challenges are:

- less control

- limited resources

- cold start issues (your functions have to be "booted up" / "woken up" / loaded into memory; this happens when they are invoked after 20 minutes of inactivity, at which point they have been deallocated to optimize resource utilization)

- accumulated expenses where a resource subscription would be cheaper than pay per execution.

Lastly, the ecosystem is still immature. If you expect constant high throughput, a dedicated server is more beneficial from a cost perspective. Also, serverless tends to lock you in to a vendor more, since you rely more on their specific services.

The point here, is the workload: In your app, you likely have both event driven and other workloads, meaning that you will need to utilize both approaches to gain the most benefit.

Depending on granularity, you could argue that multiple functions are part of the same logical microservice. In Azure Functions, a Function App is the boundary of a service instance. It's a deployable unit. Within a Function App, you use one language. It's my experience that one team manages one Function App, and thus can agree on schema changes.

When you deploy function A, functions B and C are automatically recycled. The functions scale independently, however.

You need to pay attention to the dependencies you use. In the image above, we illustrate more users causing more function instances to be created, all using an under-provisioned data store.

Since an app is a single deployable unit, we recommend declaring shared resources such as HTTP, Redis and Cosmos DB clients as singletons, shared by each function instance.
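
A minimal sketch of the singleton recommendation, in Python. `FakeHttpClient` is a hypothetical stand-in for any client that is expensive to create, and `function_a` / `function_b` are illustrative names, not part of any Azure SDK. The point is only that both functions in the same app reuse one lazily created client instead of constructing their own on every invocation.

```python
import functools
import itertools

# Counts how many times the (hypothetical) expensive client is constructed.
_constructions = itertools.count(1)


class FakeHttpClient:
    """Stand-in for an expensive client (HTTP, Redis, Cosmos DB)."""

    def __init__(self) -> None:
        self.instance_number = next(_constructions)

    def get(self, url: str) -> str:
        return f"GET {url} via client #{self.instance_number}"


@functools.lru_cache(maxsize=None)
def get_http_client() -> FakeHttpClient:
    """Create the client on first use and reuse it for every later call."""
    return FakeHttpClient()


def function_a(url: str) -> str:
    # Hypothetical function in the app: borrows the shared client.
    return get_http_client().get(url)


def function_b(url: str) -> str:
    # A second function in the same app: gets the very same client object.
    return get_http_client().get(url)
```

Both functions report the same client number, which is what keeps connection counts flat as more invocations arrive.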

One benefit of using Functions with Event Hub is that the function runtime will automatically scale up and down to handle the event hub load. We recommend reading data in a batch (input bindings to arrays, lists, collections) when you react to a trigger, as opposed to dealing with each data item individually.

Attach a correlation id to your requests, so that you can track them throughout your various endpoints (API - SVC - Storage).
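
As an illustration of passing a correlation id along the API - SVC - Storage chain, here is a small Python sketch. The header name `X-Correlation-Id` and the three handler functions are assumptions made for the example; use whatever header your gateway or service mesh actually emits.

```python
import uuid

CORRELATION_HEADER = "X-Correlation-Id"  # assumed header name


def ensure_correlation_id(headers: dict) -> dict:
    """Return a copy of the headers that is guaranteed to carry a correlation id."""
    result = dict(headers)
    if not result.get(CORRELATION_HEADER):
        result[CORRELATION_HEADER] = str(uuid.uuid4())
    return result


def log(headers: dict, message: str) -> str:
    # Every log line is prefixed with the id so the lines can be joined later.
    return f"[{headers[CORRELATION_HEADER]}] {message}"


def storage_handler(headers: dict) -> list:
    return [log(headers, "Storage: item written")]


def service_handler(headers: dict) -> list:
    # Forward the same headers downstream instead of creating new ones.
    return [log(headers, "SVC: processing")] + storage_handler(headers)


def api_handler(incoming_headers: dict) -> list:
    headers = ensure_correlation_id(incoming_headers)
    return [log(headers, "API: request received")] + service_handler(headers)
```

Calling `api_handler({})` yields three log lines that all share one generated id; calling it with an existing id keeps that id unchanged.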

For inter-service communication, use Storage queues in order to benefit from the competing consumer pattern. This will give you retries and poison message handling for free.
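
To make the retry and poison message behavior concrete, here is an in-memory Python simulation of competing consumers. It does not call Azure Storage; the queue, the two worker names and the threshold `MAX_DEQUEUE_COUNT = 5` are assumptions chosen for the sketch. With Storage queues and the Functions runtime you get the equivalent behavior without writing this yourself.

```python
from collections import deque
from dataclasses import dataclass

MAX_DEQUEUE_COUNT = 5  # assumed threshold before a message is considered poison


@dataclass
class Message:
    body: str
    dequeue_count: int = 0


def handler(body: str) -> None:
    # Hypothetical business logic: one message can never be processed.
    if body == "bad":
        raise ValueError("cannot parse message")


def consume_one(queue: deque, poison: list, processed: list, worker: str) -> bool:
    """Let one worker take one message. Returns False when the queue is empty."""
    if not queue:
        return False
    message = queue.popleft()
    message.dequeue_count += 1
    try:
        handler(message.body)
        processed.append((worker, message.body))
    except Exception:
        if message.dequeue_count >= MAX_DEQUEUE_COUNT:
            poison.append(message)  # give up and park it for inspection
        else:
            queue.append(message)  # make it available again for a retry
    return True


def run_demo():
    queue = deque(Message(body) for body in ["a", "bad", "b", "c"])
    poison, processed = [], []
    workers = ["worker-1", "worker-2"]
    turn = 0
    # The two workers take turns pulling from the same queue.
    while consume_one(queue, poison, processed, workers[turn % 2]):
        turn += 1
    return processed, poison
```

In `run_demo()`, the healthy messages are spread across both workers, while the failing one is retried until it reaches the threshold and then lands in the poison list.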

Prefer Choreography over Orchestration: Each function independently picks work from a queue and reports readiness (completed transaction ABC-123). Then, a function downstream reads the completion messages, deciding when the transaction is completed.
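
The downstream function in this choreography can be sketched as a small tracker, shown here in Python. The step names in `REQUIRED_STEPS` and the `CompletionTracker` class are hypothetical; in a real system the state would live in durable storage rather than in memory.

```python
from collections import defaultdict

# Assumed set of steps that must all report before a transaction is done.
REQUIRED_STEPS = frozenset({"validate", "analyze", "aggregate"})


class CompletionTracker:
    """Reads completion messages and decides when a transaction is complete."""

    def __init__(self, required_steps=REQUIRED_STEPS) -> None:
        self._required = frozenset(required_steps)
        self._seen = defaultdict(set)

    def on_completion(self, transaction_id: str, step: str) -> bool:
        """Record one completion message; return True once every step has reported."""
        self._seen[transaction_id].add(step)
        return self._required <= self._seen[transaction_id]
```

No function tells another what to do: each worker only publishes "step X of transaction ABC-123 is done", and the tracker tolerates duplicates and out-of-order messages because it stores a set per transaction.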

Linkerd gives you a correlation id in an HTTP header. Your responsibility is to propagate this id throughout your calls and logs.

You can accomplish the same by populating Application Insights' OPERATION_ID.

Add a security layer to your application by publishing an API Gateway that exposes your functions. The functions can then expose their APIs privately.

Versioning is extremely important. To minimize downtime, we recommend the following flow:

With an upcoming release, functions will support deployment slots, just like App Services, minimizing downtime even further. Here, again, an API Gateway (Azure API Management) helps by acting as an indirection, buffering and retrying requests.

Serverless reference architecture

Francis Cheung

With a Reference Implementation, we build a codebase to simulate a problem in the wild, in order to eventually produce a Reference Architecture. It's intentionally not complete.

If you already have an App Service plan that is underutilized, put some functions in it! If you need to run your functions continuously, need a lot of CPU and memory, or run functions for longer than 10 minutes, you want to use a dedicated App Service plan. Further, with a dedicated plan, you can connect to your on-premises network. The dedicated plan also supports separate deployment slots that you can warm up and swap (we recommend deployment, staging, last known good (production)).

On the other hand, if you have an application that is only occasionally used (asynchronous burst loads), pick the consumption plan. It scales based on triggers and can scale up to a maximum of 200 instances. Our testing has shown cold starts of between 3 and 11 seconds. To keep your function app running, add a timer-triggered function that executes every 19 minutes. It's a cheap way to keep the application warm.

We recommend using Managed Service Identity.

In the dedicated plan, you have control of how you scale out (i.e. how many function instances are started). In the consumption plan, scaling depends on the trigger and its heuristics (e.g. queue length).

Common IoT scenarios involve sending sensor data as soon as it's available (partial data) and point-in-time data (keyframe) every so often (all the sensor's current values). Protobuf is a popular binary format for this.

Three bindings support retries, if you were to throw an exception in your function code: Azure Blob Storage, Azure Queue Storage, Azure Service Bus. See https://hackernoon.com/reliable-event-processing-in-azure-functions-37054dc2d0fc

In Cosmos DB, we have found that we spend

- 1 RU per read operation

- 6-10 RUs per write operation

So an upsert costs 7-11 RUs.
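
The arithmetic above can be turned into a rough capacity estimate. The Python sketch below uses only the RU figures from these notes; the workload of 200 reads and 50 upserts per second is a made-up example, and real RU charges depend on document size and indexing, so treat the result as a starting point for measurement.

```python
READ_RU = 1               # observed cost of one read, from the notes
WRITE_RU_RANGE = (6, 10)  # observed cost range of one write, from the notes


def upsert_ru_range() -> tuple:
    """An upsert is modeled as one read plus one write."""
    low, high = WRITE_RU_RANGE
    return READ_RU + low, READ_RU + high


def required_ru_per_second(reads_per_second: int, upserts_per_second: int) -> tuple:
    """Return the (low, high) RU/s estimate for a steady workload."""
    upsert_low, upsert_high = upsert_ru_range()
    base = reads_per_second * READ_RU
    return (base + upserts_per_second * upsert_low,
            base + upserts_per_second * upsert_high)


# Hypothetical workload: 200 reads/s and 50 upserts/s.
estimate = required_ru_per_second(200, 50)
```

For this hypothetical workload the estimate is 200 + 50 x 7 = 550 RU/s at the low end and 200 + 50 x 11 = 750 RU/s at the high end.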

In order to unit test our function apps, we found it useful to create "processors" with property injection that abstract the function execution.
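
A minimal sketch of what such a processor could look like, in Python. The names `TelemetryProcessor`, `InMemoryStore`, `run` and the `deviceId` field are hypothetical and are not taken from the reference implementation; the idea is only that the function entry point stays thin while the logic sits in a class whose dependencies are plain properties a test can replace.

```python
class TelemetryProcessor:
    """Holds the business logic so the function entry point stays thin."""

    def __init__(self) -> None:
        # Property injection: the dependency is a plain attribute that
        # startup code (or a unit test) assigns after construction.
        self.store = None

    def process(self, events: list) -> int:
        """Save every event that carries a device id; return how many were saved."""
        valid = [event for event in events if "deviceId" in event]
        for event in valid:
            self.store.save(event)
        return len(valid)


class InMemoryStore:
    """Test double that replaces the real data store."""

    def __init__(self) -> None:
        self.saved = []

    def save(self, item: dict) -> None:
        self.saved.append(item)


def run(events: list, processor: TelemetryProcessor) -> int:
    # Thin entry point: in a real app the trigger binding would call this.
    return processor.process(events)
```

A unit test creates the processor, assigns an `InMemoryStore` to `processor.store`, calls `run`, and asserts on what was saved, without any Functions runtime involved.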

I highly recommend instrumenting your code (see the reference implementation for .TrackValue snippets).

You can request a maximum batch size from Event Hub. However, if you have a slow stream of events, you will get smaller batches.

API management provides a correlation/operation ID that you can use to track your request.

Access to Query object from function declaration?

Managed Service Identity lets you access your Key Vault from a service, giving your application access to the resources it needs without you having to deal with usernames and passwords. See https://docs.microsoft.com/en-us/azure/active-directory/managed-identities-azure-resources/services-support-msi

Use the KeyVaultClient to access the vault until there are proper bindings for Azure Functions.

To increase performance, you often need to increase parallelization, using e.g. Event Hub partitions. The clients will automatically retry when receiving 429 - TOO MANY REQUESTS as a band-aid for spikes (built on AutoRest), but you need to monitor and instrument your application to learn when you need to scale up (pay for more).
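
The client libraries already retry on 429 for you, so the following Python sketch is only meant to show the mechanism and where instrumentation can hook in. `TooManyRequests`, `call_with_retry` and the `on_throttle` callback are hypothetical names for this example, not SDK APIs.

```python
import time


class TooManyRequests(Exception):
    """Stand-in for a 429 response that tells the caller how long to wait."""

    def __init__(self, retry_after_seconds: float) -> None:
        super().__init__("429 Too Many Requests")
        self.retry_after_seconds = retry_after_seconds


def call_with_retry(operation, max_attempts=4, sleep=time.sleep, on_throttle=None):
    """Run operation, waiting and retrying whenever it is throttled."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TooManyRequests as error:
            # Report every throttle so that monitoring can reveal a trend.
            if on_throttle is not None:
                on_throttle(attempt, error.retry_after_seconds)
            if attempt == max_attempts:
                raise
            sleep(error.retry_after_seconds)
```

Retrying hides the occasional spike, but a steadily growing count from `on_throttle` is the signal that it is time to scale up rather than keep waiting.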

Choosing the right technology

Masashi Narumoto

- N-Tier = Virtual Machines

- Event driven = Functions

- Microservices = AKS/SF

To ingest and store data, consider Event Hub Capture. To deliver data without compute, consider serving content directly from storage, with an intermediary CDN.

The App Service container support can be utilized for web apps, web jobs or, really, anything.

See containers as a complement to WebJobs.

We recommend separate storage accounts for logs, queues and static content. Use Azure Search for quick search.

One of Microsoft's largest customers uses the architecture below and reports that 98% of the requests go through the static (cheap) part of the system.

Again, consider Event Hub capture to ingest and store data.

You should consider deploying a readiness probe to give more detailed health information to the cluster.

SQL Managed Instance lies between VMs and Database. We are missing Mongo API (a subset) and Table API in this chart.

For logging, we used to recommend Blob. Now, we recommend Azure Monitor (through App Insights). It will store your logs for 90 days, whereafter you can export the logs to blob storage for archive purposes.

Istio is a specialized solution for inter-service messaging, which is otherwise solved by Service Bus or queues.

Use a marker message with multiple entries. Retry transaction 2 a number of times before executing a compensation action (rollback) against database 1.
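
The retry-then-compensate part of this advice can be sketched as follows, in Python. The function and parameter names are hypothetical, the marker message itself is not modeled, and `max_retries=3` is an arbitrary choice for the example.

```python
def run_with_compensation(step_one, step_two, compensate_step_one, max_retries=3) -> bool:
    """Run step_one, then retry step_two; undo step_one if step_two keeps failing.

    Returns True when both steps succeeded, False when step_one was compensated.
    """
    step_one()  # e.g. the write against database 1
    for _ in range(max_retries):
        try:
            step_two()  # e.g. the write against database 2
            return True
        except Exception:
            continue  # transient failure assumed: try again
    # Step two never succeeded, so roll back the effect of step one.
    compensate_step_one()
    return False
```

The compensation action should itself be safe to repeat, since it may be triggered more than once if the caller is retried.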

The function proxy is a lightweight alternative, if you can get away with simple routing.

Designing high throughput event driven solutions

Francis

- Hot path for real-time alerts

- Warm path for near-time analysis and dashboards

- Cold path for over-time analysis (month over month, year over year)

The same data is being written to all three paths and dealt with in different ways (alerts, geospatial queries, dashboards).

Stream Analytics is a great way to filter data and pass it through, or to aggregate data over time windows. A streaming job may go down unexpectedly, which is why you need good monitoring here (as well).

The PartitionId is preserved from Event Hub or IoT Hub.

By default, TIMESTAMP is the ingestion timestamp and not the timestamp of the actual event. By providing your own timestamp, you can use that instead. Use the OVER-clause to isolate wallclock differences between devices (e.g. if they're wrongly configured as years in the future/past).

Store business events together with application telemetry in Azure Monitor so that you can correlate and query. You can connect Grafana to this data, if you want fancier graphs.

To see RU consumption per partition (e.g. to figure out throughput issues with a Cosmos DB where you've defined partitioning, but forgot to pass the partitioning value in your code), see Cosmos DB - Metrics - Database - Collection - Bottom-right:
